Raymii.org


Kubernetes (k3s) Ingress for different domains (virtual hosts)

Published: 10-07-2024 20:39 | Author: Remy van Elst



Now that I have a high-available local Kubernetes cluster, it's time to learn not just how to manage the cluster but how to actually deploy some services on it. Most examples online use a NodePort or a LoadBalancer to expose a service on a port, but I want to have domains, like grafana.homelab.mydomain.org instead of 192.0.2.50:3000. Back in the old days this was called a Virtual Host: using 1 IP for multiple domains. My k3s cluster uses traefik for its incoming traffic, and by defining an Ingress we can route a domain to a service (like a ClusterIP). This page will show you how.

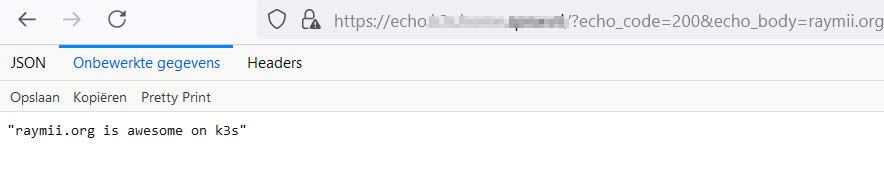

Here's a screenshot of echoapp running on a resolvable actual domain:

The version of Kubernetes/k3s I use for this article is v1.29.6+k3s1.

Ingress is already being replaced by the Gateway API and if using traefik, which k3s does by default, you have more flexibility with an IngressRoute. But, as far as I can tell, Gateway API is not really stable yet and for simplicity's sake I'm using Ingress instead of IngressRoute. If I later want to swap out traefik for nginx my other stuff should just keep working.

I assume you have k3s up and running and have kubectl configured on your local admin workstation. If not, consult my previous high available k3s article for more info on my specific setup.

DNS Configuration

For this setup to work you must create DNS records pointing to the high available IP of your Kubernetes cluster. I created one regular A record and a wildcard:

dig +short k3s.homelab.mydomain.org

Output:

192.0.2.50

Same for *.k3s.homelab.mydomain.org.

Setup differs per domain provider or if you have your own DNS servers so I'm not showing that here. You could, for local purposes, also put the domain name in your local /etc/hosts file (and on your k3s nodes as well).
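As a rough sketch of the /etc/hosts route: that file has no wildcard support, so every hostname has to be listed explicitly. The snippet below assumes 192.0.2.50 is the cluster IP from the dig output above and that you want both the base name and the echo hostname used in the Ingress later on. It only prints the line; appending it to the file is left commented out.

```shell
# Assumption: 192.0.2.50 is the high-available cluster IP (see the dig output above).
CLUSTER_IP="192.0.2.50"

# /etc/hosts cannot hold wildcards, so name every host you want to reach.
HOSTS_LINE="${CLUSTER_IP} k3s.homelab.mydomain.org echo.k3s.homelab.mydomain.org"

# Print the line so you can review it before touching the file.
echo "${HOSTS_LINE}"

# To append it for real (needs root), uncomment:
# echo "${HOSTS_LINE}" | sudo tee -a /etc/hosts
```

Every new service hostname needs its own entry this way, which is why a wildcard DNS record is more convenient once you deploy more than a few apps.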

Deployment

For the example I'm using a very simple application, the echoserver from Marchandise Rudy.

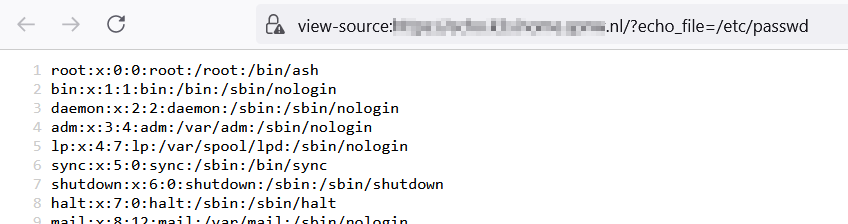

Do note that this app can read arbitrary files and expose them, so don't run this somewhere that has sensitive data. Appending the /?echo_file=/ URL parameter allows you to view any file the app has access to.

The domain name I'm using is echo.k3s.homelab.mydomain.org.

Create a folder for the yaml files:

mkdir echoapp

cd echoapp

Create a namespace to keep things tidy:

kubectl create ns echoapp

Create the deployment file:

vim echoapp-deployment.yaml

Contents:

apiVersion: apps/v1
kind: Deployment
metadata:
  name: echo-deployment
  labels:
    app: echo
spec:
  replicas: 3
  selector:
    matchLabels:
      app: echo
  template:
    metadata:
      labels:
        app: echo
    spec:
      containers:
        - name: echo
          image: ealen/echo-server:latest
          ports:
            - containerPort: 80
          livenessProbe:
            httpGet:
              path: "/?echo_code=200"
              port: 80
          readinessProbe:
            httpGet:
              path: "/?echo_code=200"
              port: 80
---
apiVersion: v1
kind: Service
metadata:
  name: echo-service
spec:
  ports:
    - port: 80
  selector:
    app: echo

Apply the file:

kubectl -n echoapp apply -f echoapp-deployment.yaml

This is a fairly standard deployment file with a Deployment and a Service. I've included a livenessProbe and a readinessProbe for fun, but in this case they don't add much value.

In Kubernetes, liveness and readiness probes are used to check the health of your containers.

Liveness Probe: Kubernetes uses liveness probes to know when to restart a container. For instance, if your application had a deadlock and is no longer able to handle requests, restarting the container can make the application more available despite the bug.

Readiness Probe: Kubernetes uses readiness probes to decide when the container is available for accepting traffic. The readiness probe is used to control which pods are used as the backends for services. When a pod is not ready, it is removed from service load balancers.

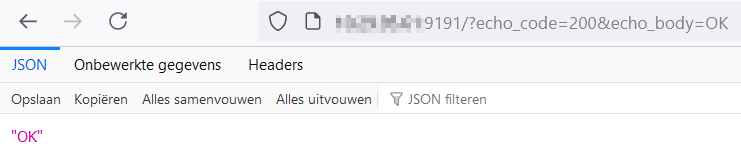

Test the deployment by creating either a NodePort or a LoadBalancer:

kubectl expose service echo-service --type=NodePort --port=9090 --target-port=80 --name=echo-service-np --namespace echoapp

or:

kubectl expose service echo-service --type=LoadBalancer --port=9191 --target-port=80 --name=echo-service-ext --namespace echoapp

Get the newly created port/loadbalancer:

kubectl -n echoapp get services

Output:

NAME               TYPE           CLUSTER-IP      EXTERNAL-IP                        PORT(S)          AGE
echo-service       ClusterIP      10.43.188.135   <none>                             80/TCP           9m5s
echo-service-ext   LoadBalancer   10.43.93.211    192.0.2.61,192.0.2.62,192.0.2.63   9191:30704/TCP   29s
echo-service-np    NodePort       10.43.10.130    <none>                             9090:30564/TCP   77s

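Access that ip:port combo in your browser and you should see the app working. For the NodePort that is any node IP with port 30564; for the LoadBalancer it is one of the external IPs with port 9191.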

Ingress

To make this deployment available via a hostname and not an ip:port combo you must create an Ingress resource.

An Ingress needs apiVersion, kind, metadata and spec fields. The name of an Ingress object must be a valid DNS (sub)domain name.
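The naming rule can be checked before you apply anything. Below is a minimal sketch, not something kubectl ships: is_dns_subdomain is a hypothetical helper name, and the pattern encodes the Kubernetes DNS subdomain rule (lowercase alphanumerics, '-' and '.', starting and ending with an alphanumeric, at most 253 characters).

```shell
# Hypothetical helper: succeeds (exit 0) when $1 is usable as an Ingress name.
is_dns_subdomain() {
  name="$1"
  # The whole name may be at most 253 characters long.
  [ "${#name}" -le 253 ] || return 1
  # Dot-separated labels of lowercase alphanumerics and '-',
  # each label starting and ending with an alphanumeric character.
  printf '%s\n' "$name" | grep -Eq '^[a-z0-9]([-a-z0-9]*[a-z0-9])?(\.[a-z0-9]([-a-z0-9]*[a-z0-9])?)*$'
}

# Example: report on the name used in this article.
if is_dns_subdomain "echo-ingress"; then
  echo "echo-ingress is a valid name"
fi
```

A name like Echo_Ingress fails this check because of the uppercase letters and the underscore. The API server rejects invalid names as well, so this is only a convenience for catching the mistake earlier.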

Create the file containing your Ingress yaml:

vim echoapp-ingress.yaml

Contents:

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: echo-ingress
spec:
  rules:
    - host: echo.k3s.homelab.mydomain.org
      http:
        paths:
          - pathType: Prefix
            path: "/"
            backend:
              service:
                name: echo-service
                port:
                  number: 80

The file contents are fairly simple and speak for themselves. The most important parts are:

- host: echo.k3s.homelab.mydomain.org - the DNS domain you want the service to be available on.
- backend.service.name - must match the name of the Service resource.
- backend.service.port - must match the Service port.

Apply the file:

kubectl -n echoapp apply -f echoapp-ingress.yaml

After a few seconds you should be able to see your Ingress:

kubectl -n echoapp get ingress

Output:

NAME           CLASS    HOSTS                           ADDRESS                                       PORTS     AGE
echo-ingress   <none>   echo.k3s.homelab.mydomain.org   192.0.2.60,192.0.2.61,192.0.2.62,192.0.2.63   80, 443   2d23h

Try to access the domain name in your web browser. You should see the page right away.

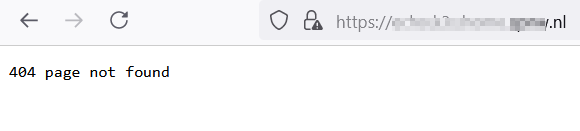
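If DNS is not in place yet, or you want to rule out the browser, the Ingress can also be tested from the command line. This is a sketch under a few assumptions: 192.0.2.50 is the cluster IP from the DNS section, and check_ingress is a hypothetical helper name. The request goes to the IP, while the Host header carries the domain that the Ingress rule matches on.

```shell
# Assumptions: 192.0.2.50 is the cluster IP from the DNS section and
# check_ingress is a hypothetical helper name, not part of any tool.
INGRESS_IP="192.0.2.50"
INGRESS_HOST="echo.k3s.homelab.mydomain.org"

# Talk to the IP directly, but present the hostname in the Host header.
# The Ingress rule matches on that header, not on how the IP was resolved.
check_ingress() {
  curl --silent --output /dev/null --write-out '%{http_code}\n' \
    --header "Host: ${INGRESS_HOST}" "http://${INGRESS_IP}/"
}
```

Calling check_ingress prints only the HTTP status code. With the Ingress, Service and Pods all healthy that should be 200; changing INGRESS_HOST to a name without a matching rule should give a 404.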

Traefik: 503 instead of 404 if the targeted Service has no endpoints available

One odd thing I noticed when experimenting with Ingress is that if your configuration is wrong or you try to access a Service which has a failed Deployment, you'll get an HTTP 404 error. I'd expect a 503, since there is no server available, not a Not Found error.

When there are no Pods running, the default config returns a 404 Not Found. With the "fixed" config you get a 503 Service Unavailable instead.

To fix this, in the specific k3s server setup I use, you must create the following file on each k3s server node:

vim /var/lib/rancher/k3s/server/manifests/traefik-config.yaml

Add the following:

apiVersion: helm.cattle.io/v1
kind: HelmChartConfig
metadata:
  name: traefik
  namespace: kube-system
spec:
  valuesContent: |-
    dashboard:
      enabled: true
      domain: "traefik.k3s.homelab.mydomain.org"
    providers:
      kubernetesIngress:
        allowEmptyServices: true

This edits the default traefik helm chart used by k3s. After systemctl restart k3s, you should now get a 503 Service Unavailable error instead of a 404 Not Found error when a deployment has failed or no pods are running.
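One way to check the change is to take all Pods away on purpose and look at the status code. The sketch below assumes the echoapp namespace, Deployment and hostname from this article and 192.0.2.50 as the cluster IP; scale_echo and ingress_status are hypothetical helper names.

```shell
# Hypothetical helpers; names and IP are the ones assumed in this article.
NAMESPACE="echoapp"
DEPLOYMENT="echo-deployment"

# Scale the Deployment: 0 leaves the Service without endpoints, 3 restores it.
scale_echo() {
  kubectl -n "${NAMESPACE}" scale deployment "${DEPLOYMENT}" --replicas="$1"
}

# Print only the HTTP status code the Ingress answers with.
ingress_status() {
  curl --silent --output /dev/null --write-out '%{http_code}\n' \
    --header "Host: echo.k3s.homelab.mydomain.org" "http://192.0.2.50/"
}
```

Run scale_echo 0, wait a few seconds for the Pods to terminate, then run ingress_status. With allowEmptyServices enabled it should print 503, without it 404. Finish with scale_echo 3 to bring the app back.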

The fact that you have to edit this file on all k3s server nodes is a bummer, but it's fixable and that's nice.